A common roadblock RDMA developers face is the need for access to required hardware. With multiple types of RDMA fabrics, each requiring specific kinds of Network Interface Cards (NICs) to support them, a growing “paywall” blocks developers from testing their RDMA software. The Fabric Software Development Platform (FSDP) provided by the OpenFabrics Alliance (OFA) was created to support RDMA developers struggling with this issue by granting access to a cluster of machines that host RDMA-capable hardware.

Since September 2021, I have been working at the University of New Hampshire InterOperability Lab (UNH-IOL) on our Open Source support team. My first project was to learn about (and later support) the OpenFabrics Alliance (OFA), an open source project centered around accelerating the development and adoption of advanced network fabrics. My primary goal for this project evolved into maintaining the FSDP cluster, including regular upkeep of the hardware and software it provides. This led me to this past April, when I had the pleasure of presenting at the 2024 OFA Workshop on the cluster and how to take advantage of its features. Presenting was a great opportunity to learn about public speaking and technical presentations, but it also allowed me to realize exactly how this problem affects people. In one specific instance (which we’ll discuss more later), it even blocked an individual developer from writing RDMA software for multiple years, which helped me put the value of the FSDP cluster into perspective. Let me dive further into what this cluster looks like and how the common developer can take advantage of it.

One aspect of the development cycle that has plagued software engineers since the beginning is testing. While testing in general is crucial, diverse testing that covers all common code paths and use cases is a necessity. Software maintainers want to ensure that your modifications to the codebase function correctly before merging them into a release that will be deployed to production environments. For Remote Direct Memory Access (RDMA) features, a complete test suite requires not just software tests that exercise RDMA but also access to multiple Network Interface Cards (NICs) that support different RDMA fabrics, so that any differences between them can be gauged. The implication for developers is a high entry cost if they want to say confidently that their software works the same on, for example, both InfiniBand and Omni-Path fabrics, which makes writing this flavor of software less accessible to the general public. This very problem is what the OpenFabrics Alliance (OFA) Fabric Software Development Platform (FSDP) was created to solve.

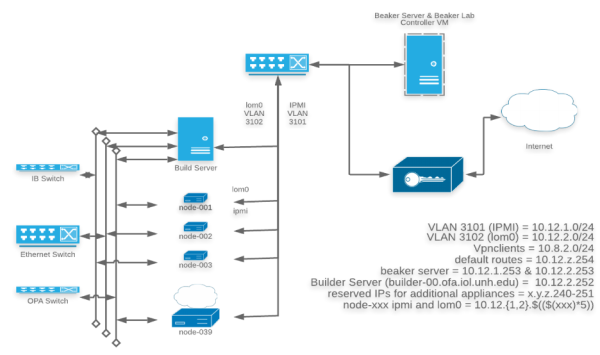

The FSDP provides a cluster of servers featuring various hardware that supports RoCE, iWARP, InfiniBand, and Omni-Path fabrics, which we make available to OFA members for either automated testing of a branch on a remote repository or manual testing via SSH access to the cluster. There are currently ten compute nodes (see Figure 1) on which users gain root access. Each one provides a temporary testing environment where you can install any packages or libraries needed for testing on the underlying hardware and fabrics. In addition to the transient testing environments, there is also a build server where users get persistent home directories in which to build reusable binaries and pass them to the testing environments later. Really, the “name of the game” when it comes to the build server is persistence, so you don’t have to recreate the exact same binary over and over on different test nodes. It’s also easy to get these binaries into your testing environments: an HTTP server on the build server hosts the public_html directory inside each user’s home directory, and access can optionally be gated by configurable credentials.
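To make the last step concrete, here is a minimal Python sketch of pulling a binary from the build server onto a test node. The host name, the `~user` URL layout, and the use of HTTP Basic authentication are assumptions made for illustration; check the FSDP documentation for the actual URL scheme and credential mechanism.

```python
import base64
import shutil
import urllib.request
from urllib.parse import quote


def artifact_url(base_url, user, filename):
    """Build the URL of a file in a user's public_html directory.

    Assumes the common "~user" mapping for per-user web directories;
    the real FSDP layout may differ.
    """
    return f"{base_url.rstrip('/')}/~{quote(user)}/{quote(filename)}"


def build_request(url, username=None, password=None):
    """Create a GET request, adding HTTP Basic credentials if both are given.

    Basic authentication is an assumption; the source only says that access
    can be gated by configurable credentials.
    """
    request = urllib.request.Request(url)
    if username is not None and password is not None:
        token = base64.b64encode(f"{username}:{password}".encode()).decode()
        request.add_header("Authorization", f"Basic {token}")
    return request


def download(request, destination):
    """Stream the response body to a local file on the test node."""
    with urllib.request.urlopen(request) as response, open(destination, "wb") as out:
        shutil.copyfileobj(response, out)
```

On a test node, this could be used as `download(build_request(artifact_url("http://build.example.test", "alice", "redis-rdma.rpm")), "redis-rdma.rpm")`, where the host, user, and file name are all hypothetical.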

Figure 1: Breakdown of hardware in the FSDP cluster

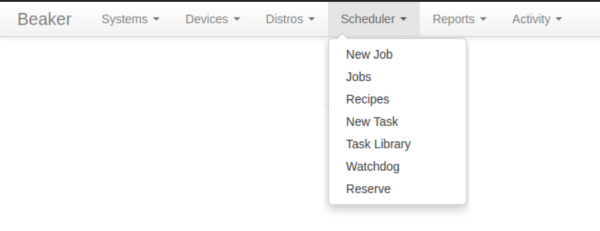

The diverse set of RDMA-capable hardware on the cluster is great, but it wouldn’t be helpful if it weren’t easily accessible to users. To address this, we expose all testing environments using an open source tool called Beaker, which is designed to manage automated labs. Beaker allows for the dynamic installation of the operating system of your choice, along with associated setup scripts, on any host you’d like in the cluster. These setup scripts, known as snippets, are configurable on multiple levels (lab-wide, per operating system, etc.), which allows us to tailor the installation process to the user’s selected host and configuration. For example, one of the ways we leverage snippets is by providing automated fabric configuration based on the hardware on a user’s selected host. Additionally, Beaker provides two primary testing methods: automated and manual. The automated process involves writing tasks hosted in a remote repository that Beaker hooks up to, then scheduling jobs to run these tasks in response to specific events, such as new changes being merged onto a specific branch. The manual process, however, is as simple as scheduling a job that reserves a specific host, which you can then access directly via SSH. Each method has its advantages: automated testing allows users to run a consistent set of tests with high regularity, whereas manual testing allows for highly configurable tests to be run ad hoc.
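As a rough illustration of the manual path, the following Python sketch generates a Beaker job definition that installs a distro on one chosen host and then reserves it. The element names follow Beaker's job XML format as I understand it; the host name, distro name, and whiteboard text are hypothetical placeholders, and the FSDP lab may require additional elements (such as an architecture or the lab's own tasks).

```python
import xml.etree.ElementTree as ET


def reservation_job_xml(hostname, distro_name, reserve_seconds=3600,
                        whiteboard="Manual RDMA testing"):
    """Return Beaker job XML that provisions one host and reserves it.

    hostname and distro_name are placeholders supplied by the caller;
    valid values depend on what the lab actually offers.
    """
    job = ET.Element("job")
    ET.SubElement(job, "whiteboard").text = whiteboard

    recipe_set = ET.SubElement(job, "recipeSet")
    recipe = ET.SubElement(recipe_set, "recipe")

    # Which operating system to install.
    distro = ET.SubElement(recipe, "distroRequires")
    ET.SubElement(distro, "distro_name", op="=", value=distro_name)

    # Which machine to install it on.
    host = ET.SubElement(recipe, "hostRequires")
    ET.SubElement(host, "hostname", op="=", value=hostname)

    # A minimal task that verifies the installation succeeded.
    ET.SubElement(recipe, "task", name="/distribution/check-install",
                  role="STANDALONE")

    # Keep the system reserved after the tasks finish so it can be
    # reached over SSH for the given number of seconds.
    ET.SubElement(recipe, "reservesys", duration=str(reserve_seconds))

    return ET.tostring(job, encoding="unicode")
```

The resulting XML can be written to a file and submitted with Beaker's command-line client, for example `bkr job-submit job.xml`.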

Figure 2: Illustration of the different options in the Beaker scheduler

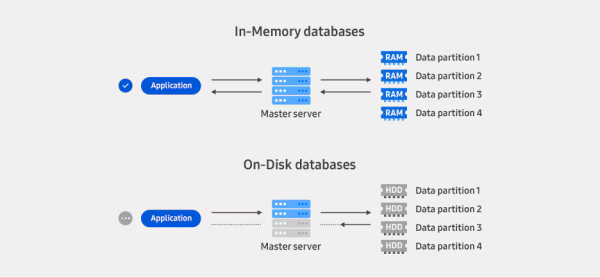

One recent example where this hardware barrier made a real impact was with Redis. Redis is a high-speed in-memory database that’s been rising in popularity. Currently, Redis receives the commands sent to its data structures over the Transmission Control Protocol (TCP), which is known for its reliability and lossless delivery, but also for the slowdowns that come with such features. Because Redis keeps its database in memory, it's easy to see how RDMA would fit right in and potentially provide performance improvements. This idea was put into practice in 2021, when a developer proposed adding RDMA support to Redis. The response to this idea was generally positive, and in 2022 that same developer opened a pull request that would have added the RDMA support. Although the developer spent months implementing the RDMA support between 2021 and 2022, and submitted functional code that was tested in one environment, the pull request remains open almost two years later. There are two primary blockers that have stopped this request from getting merged into the release branch: a lack of RDMA expertise among the maintainers of the Redis project and a lack of diverse testing of the features it provides. Enter the FSDP Cluster...

Figure 3: Difference between in-memory and on-disk databases

So, naturally, I got to work testing the software on the FSDP cluster. To do this, I first grabbed a source RPM for Redis on Fedora 38 (provided in its standard package repositories) and applied the pending upstream patch that added RDMA support to the source code. Then, I modified the spec file to compile with RDMA support and rebuilt the RPM using this newly crafted spec file. I then reserved two nodes connected via the same fabric, grabbed the RPM I had just built from the build server, and installed it. From there, all that had to be done was run the benchmark, and I was able to test Redis's RDMA features. This allowed me to test the support for these features immediately on the different hardware and fabrics supported by the FSDP nodes. Redis is just one of many applications that could benefit from leveraging RDMA but are currently blocked from doing so, partly due to hardware testing barriers. Our goal is to help more developers overcome these barriers in the future through the diverse testing offered by the FSDP cluster.
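The rebuild steps on the build server can be sketched as a short list of commands. This is an illustration under stated assumptions rather than the exact procedure used: the file names, the `redis.spec` spec name, and the build directory are hypothetical, and the spec file edits (registering the patch and enabling RDMA at compile time) are a manual step that is not shown.

```python
import shlex
from pathlib import Path


def rebuild_commands(srpm, patch, topdir):
    """Return the commands for unpacking, patching, and rebuilding an RPM.

    srpm, patch, and topdir are caller-supplied paths. The spec file must
    be edited by hand between the second and third command.
    """
    topdir = Path(topdir)
    define = ["--define", f"_topdir {topdir}"]
    return [
        # Unpack the source RPM into topdir/SOURCES and topdir/SPECS.
        ["rpm", *define, "-i", str(srpm)],
        # Place the pending upstream patch next to the other sources.
        ["cp", str(patch), str(topdir / "SOURCES")],
        # Rebuild binary and source packages from the edited spec file.
        ["rpmbuild", *define, "-ba", str(topdir / "SPECS" / "redis.spec")],
    ]


def print_plan(commands):
    """Print each command as a copy-pasteable shell line (dry run only)."""
    for argv in commands:
        print(shlex.join(argv))
```

The source RPM itself can be fetched on Fedora with `dnf download --source redis` (provided by dnf-plugins-core). Printing the plan instead of executing it keeps the manual spec edit as an explicit step between the copy and the rebuild.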

Learn more about these recent developments in OFA in this exclusive presentation, “How to set up RDMA CI using the FSDP cluster,” from the virtual OFA workshop, presented by Doug Ledford, OFA Chair, Principal Software Engineer at Red Hat, Inc., and Jeremy Spewock, UNH-IOL.

References:

- https://github.com/OpenFabrics/fsdp_docs/tree/main

- https://beaker-project.org/docs/

- https://semiconductor.samsung.com/support/tools-resources/dictionary/in-memory-databases/